WHAT IS WHIZ?

Whiz in operation at Aeon Mall, Tokyo, Japan

The task was to design and develop a commercial vacuum that is much smaller than the preceding large floor scrubbers and can operate in dynamic commercial environments. It leverages the same AI navigation system that was used to automate those scrubbers. This B2B vacuum is Whiz.

Whiz was publicly announced in Japan, the initial target market, in winter 2018, and sales opened in February 2019.

Whiz follows the "Robotics as a Service" (RaaS) model. The robots are leased, and comprehensive data such as cleaning session reports and navigation maps can be shared with the customer and across their fleet of Whiz robots.

WHY WHIZ? PROBLEM STATEMENTS

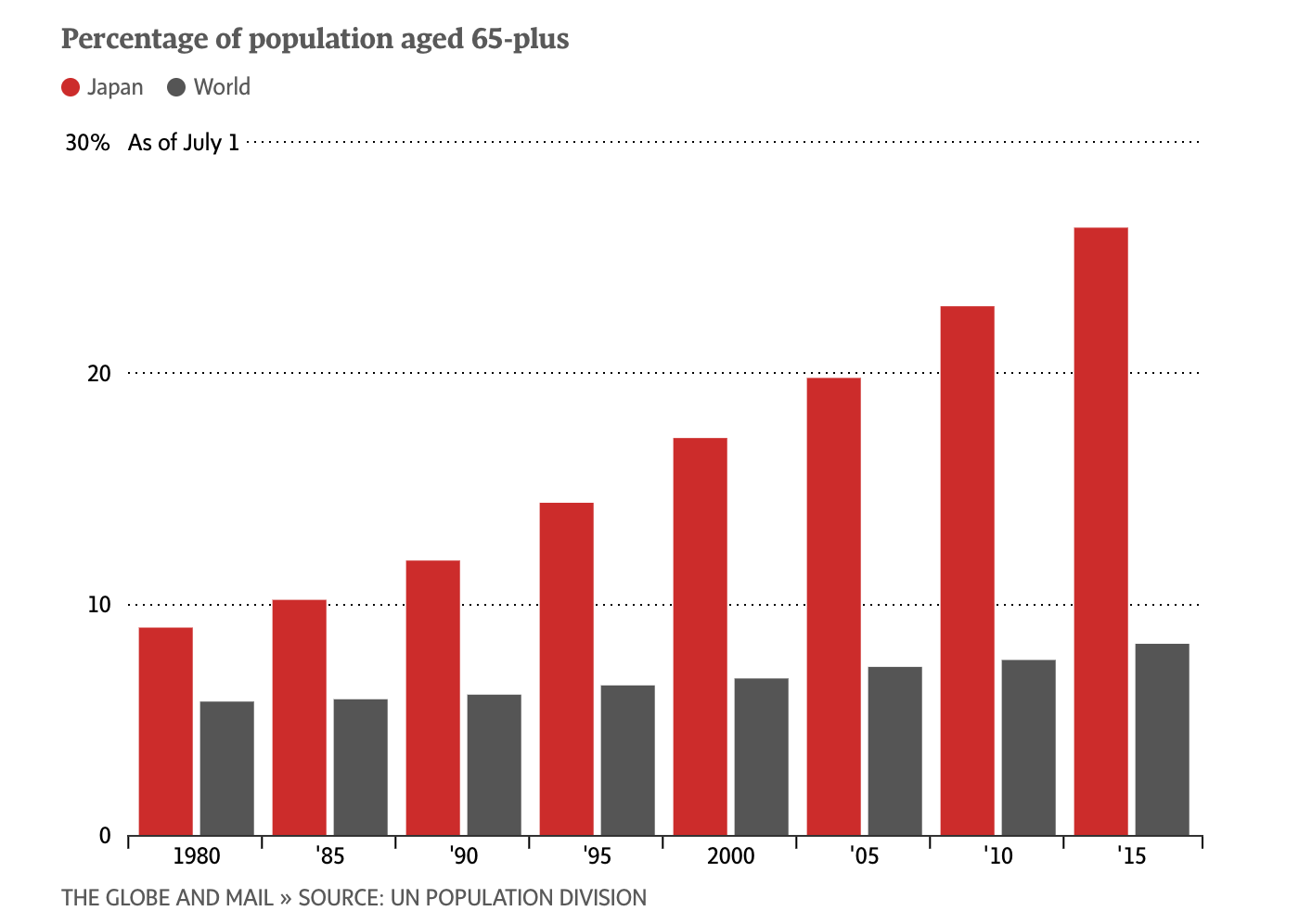

Japan's population is declining, and its elderly (defined as 65+) account for 28.1% of the population. As a result, many industries, such as janitorial and sanitation, are experiencing operational strain from a shrinking labor force. Companies, facilities managers and cleaning service contractors struggle to keep up with businesses' sanitation needs. Janitors face an extensive list of tasks to complete within a rigid working window (3-5 hrs).

In Japan, a dominant share of janitorial professionals are middle-aged to senior. Older people often move into cleaning work before retirement, and in many cases retirees continue working. Because most of the workforce is older, cleaning tasks such as vacuuming can be both time-consuming and physically arduous.

—

Design and develop a robotic solution to aid in a task that:

1) businesses lack the labor to fulfill, and 2) is physically demanding and time-consuming for a predominantly aging workforce.

WHO WILL BUILD, NEED & USE WHIZ?

First tier users: Janitors, cleaning industry professionals, facilities workers, managers, cleaning contract companies and businesses that lack cleaning staff.

Second tier users: manufacturing/assembly, deployment and training staff.

MY ROLES

Lead UX Research & Testing

Market Research

Lead Human-Robot Interaction Design

Write product feature specs

Hardware UX (Ergonomics, movement, environment functionality, visual design & communication, performance)

Software UX (navigation, error states/communication, autonomy vs. manual functions, cloud-to-robot data sync and robot-to-robot information architecture)

Lead UI Design Direction (architecture, visual design 80%, development (Qt) 10%)

Design, core systems and electrical engineering, and navigation software development were conducted in the United States. Product management and additional UX research took place in Japan, the initial target market. Manufacturing, along with some of the mechanical design, took place in China.

SCOPE AND CONSTRAINTS

Project collaboration across 3 countries. 🇺🇸🇯🇵🇨🇳

Software, design (UX/UI/mechanical/engineering) and development in the USA. Manufacturing and mechanical design in China. Product ownership in Japan. UX design, research and testing across all 3 countries 🇺🇸🇯🇵🇨🇳

Constrained timeline 1.5 years (whiteboard to hard tooling)

Target market and cultural challenges: how janitorial work is socially structured and perceived in Japan is very different from the USA.

Localization & translations.

Design & research for an older population that is mostly not fluent in English and has limited robotics/technical knowledge.

WHIZ, FROM WHITEBOARD TO PRODUCTION. THE DESIGN JOURNEY.

From environment observations and cultural interviews to sketches, cardboard models, 3D-printed bodies, ergonomic testing, lighting studies, factory studies, deployment analysis, interface designs (HW/SW), customer visits, workflows and much more, the design journey spanned 1.5 years. Each portion could warrant its own case studies, so this section highlights only the most crucial and prominent milestones.

Target Market & Competitive Research

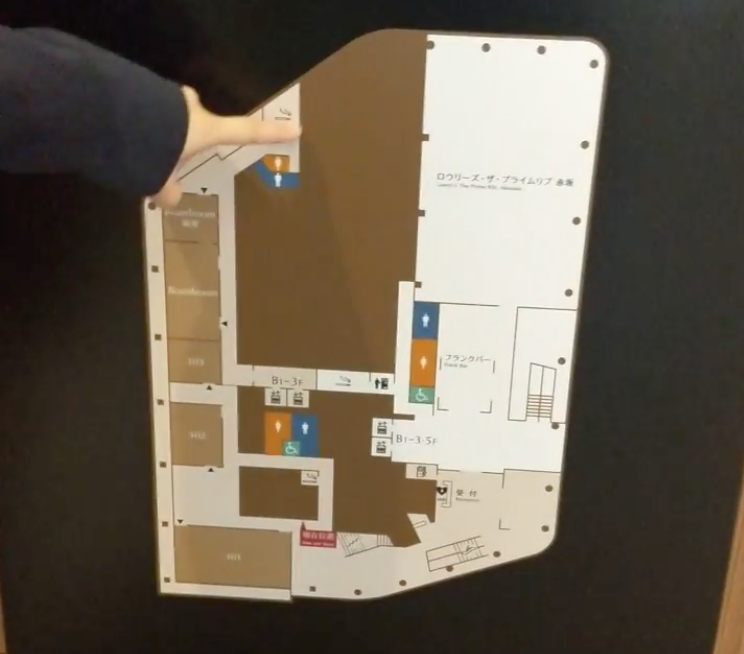

Before defining the vacuum's specifications, we needed to research the target environments (corporate buildings & offices) to understand navigation and functional requirements. I shot 360° video so that development teams could view each location, navigate through it in VR and see the technical challenges nearly firsthand. Cleaning area and aisle measurements helped define the robot's diameter. Seeing and understanding the complexity of the spaces confirmed our prediction that a near-circular robot is needed for rotating, reversing and exiting tight corners.

Interviews were conducted with customers of competing vacuum robots (Amano, Makita, etc.) to understand usage, limitations, functionality, preferences, pain points and purchasing reasons.

After the market, environment and customer research, I conducted UX/technical tear-downs of consumer vacuum cleaners. These documented their performance and navigation capabilities against the metrics customers said they prioritized: cleaning coverage, battery life, a cleanliness test, out-of-box setup time, and assists or errors and recovery. In the cleanliness test, a controlled volume and type of debris was distributed and each robot vacuumed it in its default mode.

This research established a baseline hypothesis about the specifications a robot vacuum needs to operate successfully in a commercial environment and to provide value over the technologies currently in use.

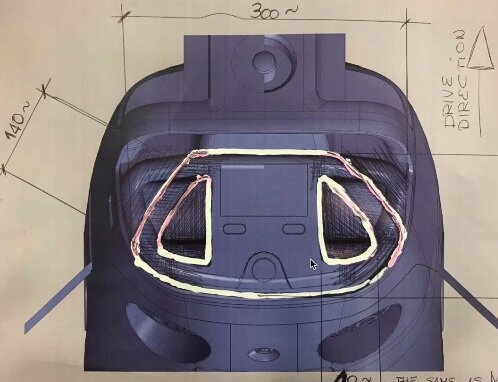

Concept Design

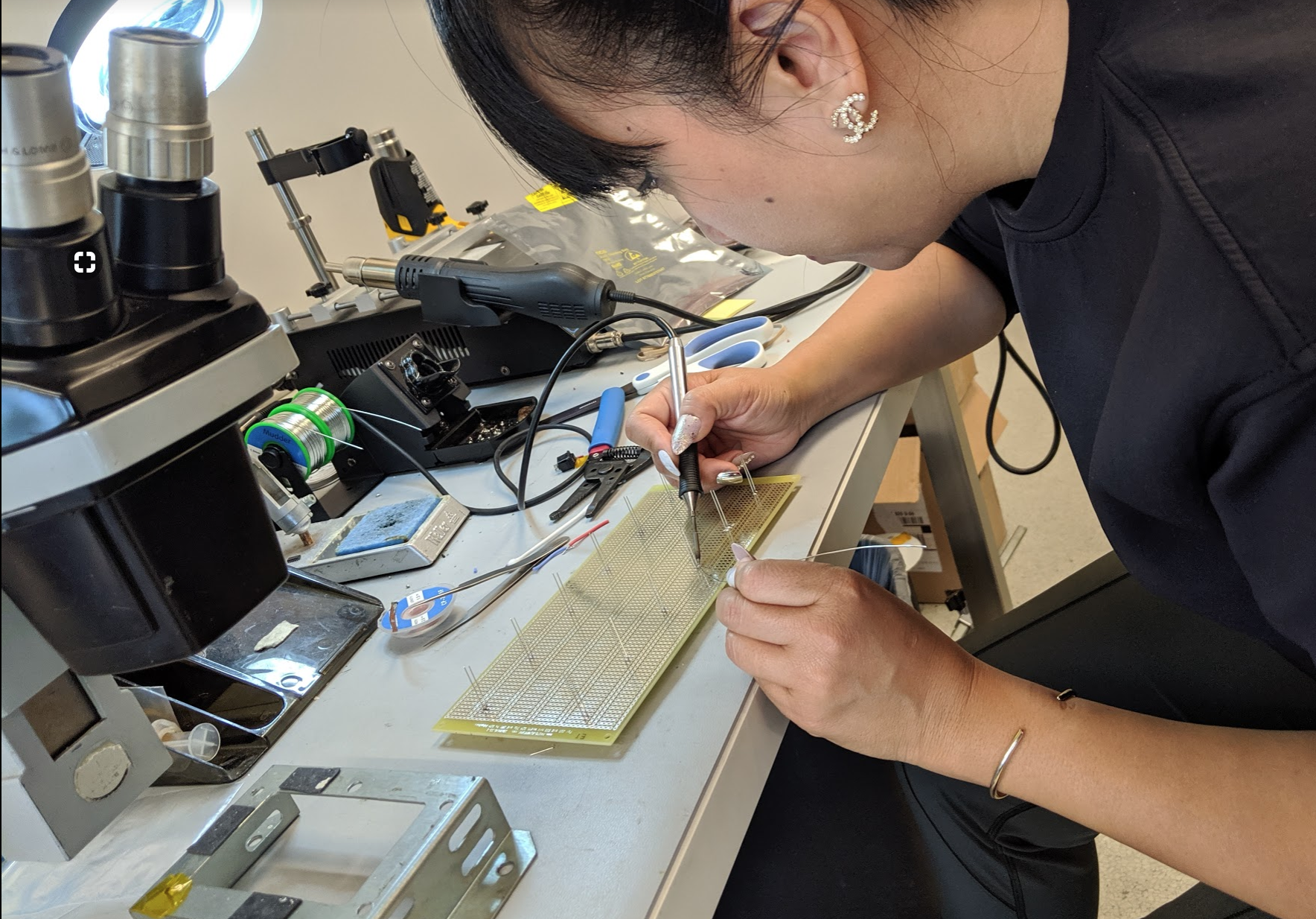

Collaboration between the teams in all 3 countries was crucial during this step. Whiteboard sketches and paper prototypes became 3D renders and eventually 3D/foam prints on a mechanically functional chassis. My core UX role here was to evaluate, inventory and mitigate every mechanical design decision that would affect janitor workflow, functional performance or the navigation software, or that would compromise safety.

Other contributions to this phase included human-to-robot interfacing decisions. These included which functions should be hardware vs. software buttons and where to place the primary robot controls (emergency stop, power, vacuum power and an autonomy go/stop button). Testing and research led to operational standards such as always having the robot start autonomy by moving away from the user. Another standard was providing physical buttons that start and stop the machine during autonomy, so that a person does not compromise safety by trying to tap a UI to control the robot.

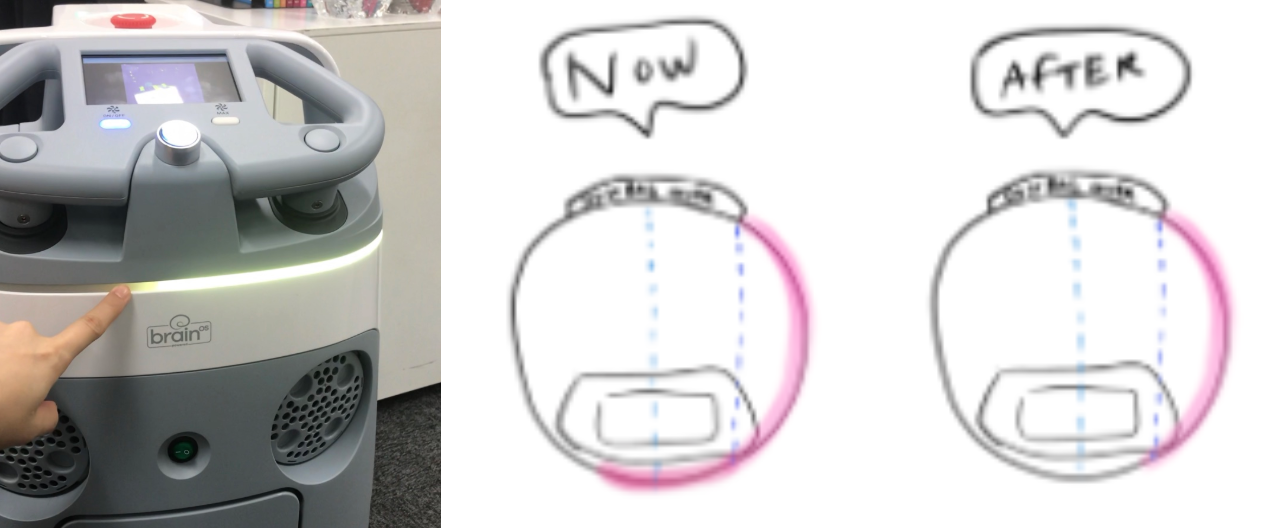

Lights and Colors Study

Human to robot and robot to environment communication is paramount for the functionality, integration and adoption of the technology.

Early testing and research confirmed the following:

Without looking at the LCD, people need to understand the robot’s directional intent and operational status (autonomy, paused, assist or error).

Because the robot is small, people need to be able to locate it easily (peripheral lighting reflection).

Any lighting solution we used would have to be robust and flexible enough to be changed and modified as the intelligent navigation software evolved.

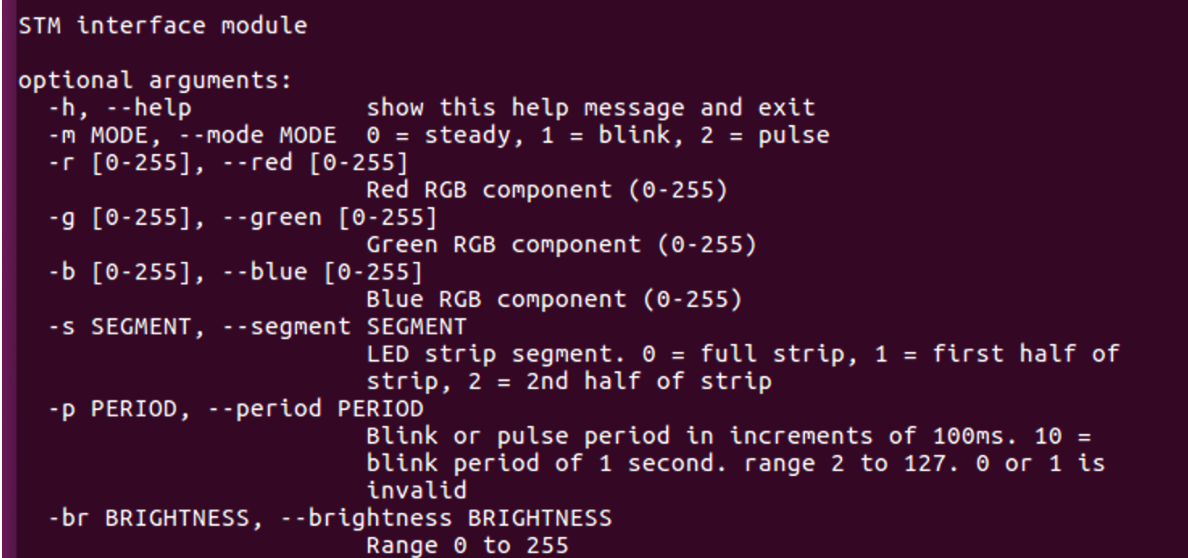

Solution selected: integrated RGB LED strip.

By mocking up an LED strip on the machine, I was able to write simple Python scripts that changed the color, brightness and pattern for user testing.
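A minimal sketch of what such a test script might compute, assuming each pattern is described by a base color, a pattern name and a period in seconds. The RGB values, the pattern names `solid`, `pulse` and `blink`, and the `frames` helper are hypothetical illustrations, not the project's actual code. The hardware driver that would push each frame to the strip is deliberately left out.

```python
import math
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical RGB values for the base colors used in testing (assumption).
COLORS = {
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "amber": (255, 191, 0),
    "white": (255, 255, 255),
    "black": (0, 0, 0),  # LEDs off
}


def scale(color: RGB, brightness: float) -> RGB:
    """Scale an RGB color by a brightness factor clamped to [0, 1]."""
    b = max(0.0, min(1.0, brightness))
    return tuple(round(c * b) for c in color)


def brightness_at(pattern: str, t: float, period: float) -> float:
    """Brightness (0..1) of a pattern at time t (seconds) for a given period (seconds)."""
    phase = (t % period) / period
    if pattern == "solid":
        return 1.0
    if pattern == "pulse":
        # Smooth "breathing": 0 at the start of each period, 1 at its midpoint.
        return 0.5 - 0.5 * math.cos(2 * math.pi * phase)
    if pattern == "blink":
        # Hard on/off: lit for the first half of each period, dark for the second.
        return 1.0 if phase < 0.5 else 0.0
    raise ValueError(f"unknown pattern: {pattern}")


def frames(color: str, pattern: str, period: float, duration: float, fps: int = 20) -> List[RGB]:
    """Precompute one color per frame; a strip driver (not shown) would display them in order."""
    n = int(duration * fps)
    return [scale(COLORS[color], brightness_at(pattern, i / fps, period)) for i in range(n)]
```

For example, `frames("blue", "blink", period=1.0, duration=1.0, fps=4)` gives two lit frames followed by two dark ones. Keeping color, pattern and tempo as separate parameters lets a tester vary one variable at a time during a session.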

Sample test conducted:

Color baseline test: show the base colors of machine operation (red, green, blue, amber, white, black). For each color, test in different scenarios whether the individual can see the light and whether the correct color registers.

Scenario 1: side peripheral, dimmed room.

Scenario 2: side peripheral, fully lit room.

Scenario 3: 50° angle, dimmed room.

Scenario 4: 50° angle, fully lit room.

*Control variable: show the same color baseline 10 m in front of the user to verify that they can see the correct color.

Color operational test: we controlled the tempo and pattern of the colors that users could see, and asked users to associate each combination with a given function (autonomous motion, paused, emergency, assist/errors, power on/off, idle).

Directional intent: in a dimmed room and a fully lit room, while the robot was moving autonomously, users were asked to identify the signal they saw (left blink, right blink or reversing).
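The color baseline test above crosses every base color with every viewing scenario. A small helper can build that trial list and tally results. The sketch below assumes each participant sees every (scenario, color) pair once in a shuffled order, and it treats the 10 m front-facing control as scenario 0. The shuffling, the per-scenario accuracy score and all function names are illustrative assumptions, not the study's documented procedure.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

COLORS = ["red", "green", "blue", "amber", "white", "black"]
SCENARIOS = {
    0: "control, 10 m in front of the user",
    1: "side peripheral, dimmed room",
    2: "side peripheral, fully lit room",
    3: "50° angle, dimmed room",
    4: "50° angle, fully lit room",
}

Trial = Tuple[int, str]  # (scenario id, color shown)


def build_trials(seed: int) -> List[Trial]:
    """Every (scenario, color) pair once, shuffled per participant to reduce order effects."""
    trials = [(s, c) for s in SCENARIOS for c in COLORS]
    random.Random(seed).shuffle(trials)  # seeded so a session can be reproduced
    return trials


def accuracy_by_scenario(trials: Sequence[Trial], answers: Sequence[str]) -> Dict[int, float]:
    """Fraction of trials per scenario where the reported color matched the color shown."""
    hits: Dict[int, int] = defaultdict(int)
    totals: Dict[int, int] = defaultdict(int)
    for (scenario, shown), reported in zip(trials, answers):
        totals[scenario] += 1
        if reported == shown:
            hits[scenario] += 1
    return {s: hits[s] / totals[s] for s in totals}
```

Comparing each scenario's accuracy against scenario 0 separates colors that people can see head-on but miss peripherally, which is the distinction the test was designed to expose.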

Conclusion: varying color hues, saturations, brightness levels and patterns were selected for the robot's operational specifications. For example: solid blue for autonomy, pulsing blue for paused, binary i/o red for emergency, pulsing red for assist, amber sectional blink for direction, rear sectional solid white for reverse and solid white for idle.
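One way to express the chosen specification so that it can be changed as the navigation software evolves is a single lookup table from operational status to light behavior. The sketch below encodes the example mapping from the conclusion. The `LightSpec` fields, the status keys, the `left`/`right`/`rear` section labels and the reading of "binary i/o" as an on/off blink are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class LightSpec:
    color: str
    pattern: str                   # "solid", "pulse" or "blink"
    section: Optional[str] = None  # part of the strip to light; None means the whole strip


# Example mapping from the lighting study's conclusion; key and section names are hypothetical.
STATUS_LIGHTS: Dict[str, LightSpec] = {
    "autonomy": LightSpec("blue", "solid"),
    "paused": LightSpec("blue", "pulse"),
    "emergency": LightSpec("red", "blink"),  # "binary i/o" read as on/off (assumption)
    "assist": LightSpec("red", "pulse"),
    "turn_left": LightSpec("amber", "blink", section="left"),
    "turn_right": LightSpec("amber", "blink", section="right"),
    "reverse": LightSpec("white", "solid", section="rear"),
    "idle": LightSpec("white", "solid"),
}


def light_for(status: str) -> LightSpec:
    """Return the light behavior for a status, failing loudly on unmapped statuses."""
    try:
        return STATUS_LIGHTS[status]
    except KeyError:
        raise ValueError(f"no light spec for status: {status}") from None
```

The table reflects a pattern visible in the chosen colors: related states share a hue (blue for autonomy and paused, red for emergency and assist), and the pattern tells them apart. Adding or retuning a state then only requires changing one entry.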

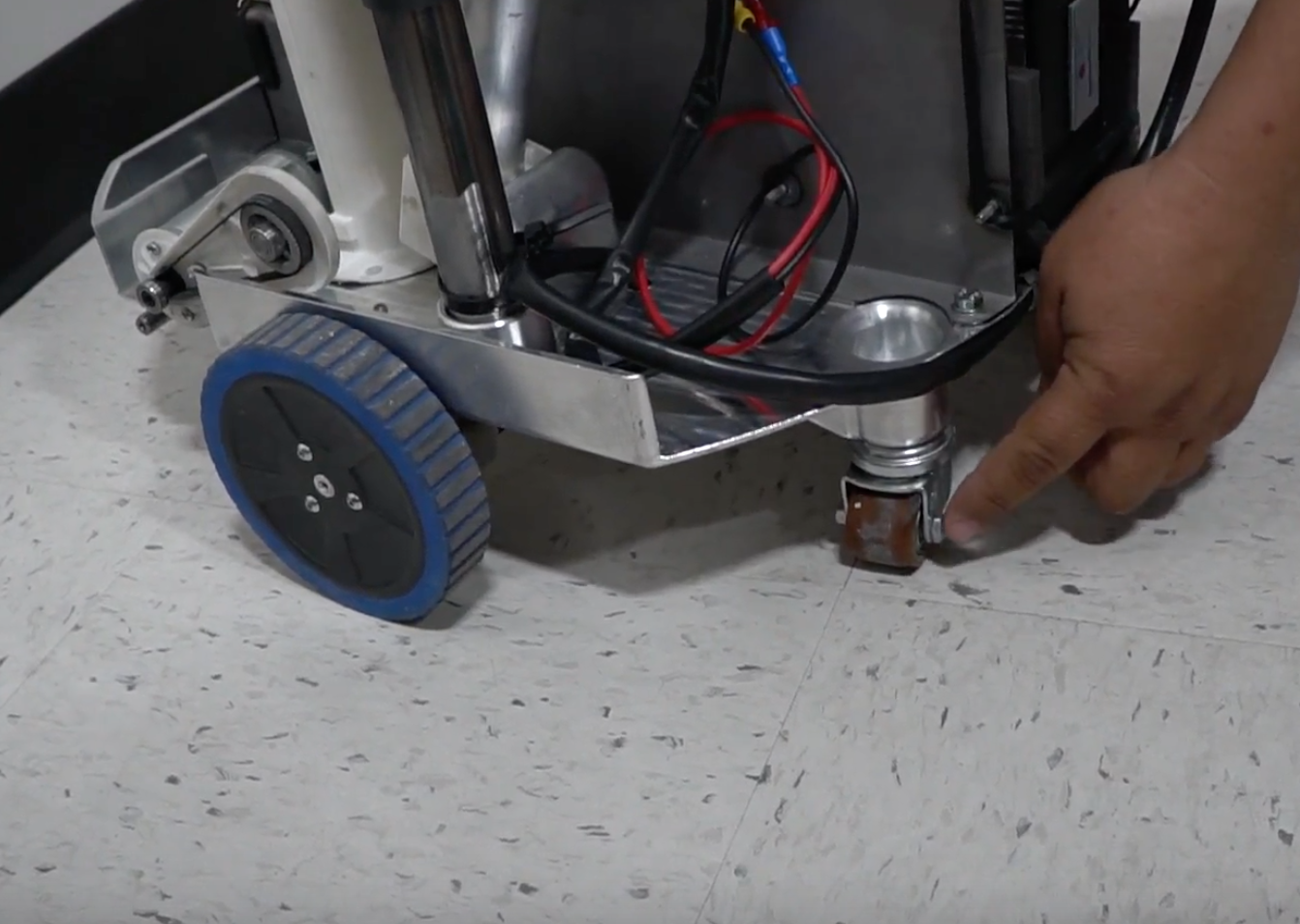

Ergonomic Testing

Ergonomic and mechanical usability tests were performed on each iteration of the vacuum unit (P0, P1, P2). Integral functions such as pushing the robot over varying floor surfaces, carrying the machine and carrying the batteries were tested to evaluate maneuverability and ease of transport.

This testing data led to numerous key UX enhancements:

The selection of caster wheels with a larger opening, so debris would not jam them.

The addition of tread and a more rubbery material to the larger wheels to avoid slippage on slick tile.

Defining the pushing handle pivot angle.

The addition of a top carrying handle, positioned so that two people could carry the machine safely.

Different 3D-printed handle molds were also tested to compare holding methods and find the one that gave the ideal grip and required minimal effort for long-term pushing.

Workflows

After the series of ergonomic and usability evaluations, autonomous navigation and vacuum functions could be validated together as a unit, extensively with users, during the P3, EVT and DVT phases. These phases were fundamental to developing work and environment workflows with our users.

Workflow goals:

Mitigate and minimize disruption to a janitor’s work life.

Evaluate and help our customers establish best practices for integrating a robotic vacuum into the workplace.

Onboard a new user to the robotic vacuum (mapping, autonomy and vacuum functions) within 18 minutes.

Integrate physical workflow with software workflow.

Time studies of janitors' tasks during working hours helped define several requirements: battery performance needs, charging station locations, ideal placements for ArUco codes (used to localize the robot), cleaning speeds, and notification methods that alert the janitor when a job is complete or an assist is needed.

A USER’S WINDOW INTO WHIZ - THE UI

Designing the interfacing channels between the robot and people was always my favorite aspect. These included the visuals and sounds that communicate intent or status, and the channel that reveals the most information to the user: the display UI. The UI is especially important because it does more than convey status and functions on the robot. It is also how a person instructs the robot on its tasks.

Initial UI design and testing were conducted by building interactive phone prototypes and mechanically mounting them to the handle. I tested on small to medium devices (iPod touch, Nexus 5, Samsung Galaxy) because the target production LCD is similar in size. This was key to quickly validating and iterating on workflows, messaging and UI features before loading designs onto the robot for testing, which was more difficult.

UI DESIGN/ARCHITECTURE TESTING HIGHLIGHTS

Are the complexities of the mapping and navigation software simplified for the user?

Can users differentiate between the core robot modes, mapping and autonomy?

Despite being a robot, does the whole machine act/appear as a vacuum first?

Can a user initiate autonomy? Can a user pause/cancel it?

When the robot needs help, can users understand the assist messaging and how to help?

At any given time, can users get information on task progress?

Does the user have access to, and understand, the robot's life statuses (battery, connectivity, operational)?

Can the user navigate within the UI seamlessly?

WHIZ IN THE WILD

This phase of the design journey came full circle to the initial market research and environment observations, but with a production robot instead of a prototype. From EOL (end-of-line) testing to unboxing and activation, I helped quantify tasks to evaluate the efficiency and ease of each process. Ongoing customer site visits and documentation of the initial deployments were crucial in giving the engineering team insight into feature prioritization and in surfacing edge-case issues.

As a designer, there are few things as rewarding as seeing your work in people's hands and being able to improve a product through ongoing research and feedback.

Whiz, launched in Japan earlier this year, is now sold worldwide (USA, Europe and Asia: China, Hong Kong, Singapore) and will support 7 languages.

Went from a whiteboard concept to mass manufacturing, with thousands of robots in the wild.

Winner of the ISSA Innovation Award and the Good Design Award.

Design enhancements over the preceding robot: increased navigation performance (turning capability, backwards motion), better assist handling for users (error animations, path recovery) and LED lighting for environment/user communication.

Users learn to use the robot within 15 minutes.

Mass media coverage.

Bloomberg

Businesswire

Robotics Business

Bend Bulletin

Non-project-related supporting articles for the problem statements:

https://www.forbes.com/sites/japan/2018/11/12/why-japans-aging-population-is-an-investment-opportunity/#4e78069e288d

https://www.theglobeandmail.com/globe-investor/retirement/retire-planning/how-japan-is-coping-with-a-rapidly-aging-population/article27259703/

https://www.japantimes.co.jp/life/2015/12/19/lifestyle/growing-old-gracefully-senior-citizens-workplace/#.Xen9i5NKj_Q

https://www.bloomberg.com/news/articles/2012-08-30/in-japan-retirees-go-on-working

https://www.japantimes.co.jp/news/2019/05/23/national/social-issues/septuagenarian-job-hunters-become-japans-new-normal/#.Xen9rpNKj_Q

CREDITS:

Associate UI design - Nikola Simic

3D Animator/SFX Artist - Jimmy Kim

Associate Tokyo UX designer - Norika Kato

Mechanical Design - Gianfranco Ghiraduzzi

Brain Corp official site

Whiz SoftBank Robotics official site